Did you know?

Over 40% of internet traffic comes from automated bots, including web crawlers.

Web crawlers collect data and help search engines index your site. Knowing which crawlers visit your site also helps you strengthen its security.

The internet is like a library where tiny, tireless crawlers run from shelf to shelf. They read every book, take notes, and organize them neatly. That’s what web crawlers do on the internet. They scan websites, collect data, and make it easier for search engines to show you what you need.

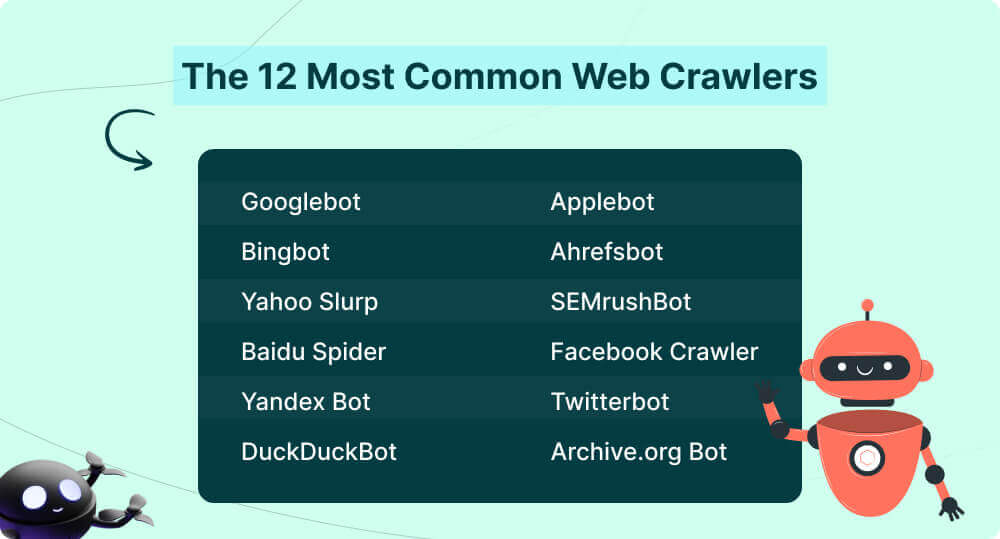

This guide walks you through the 12 most common web crawlers. You’ll learn their roles and how they impact your website, along with actionable tips to optimize your site while safeguarding it.

Let’s dive in!

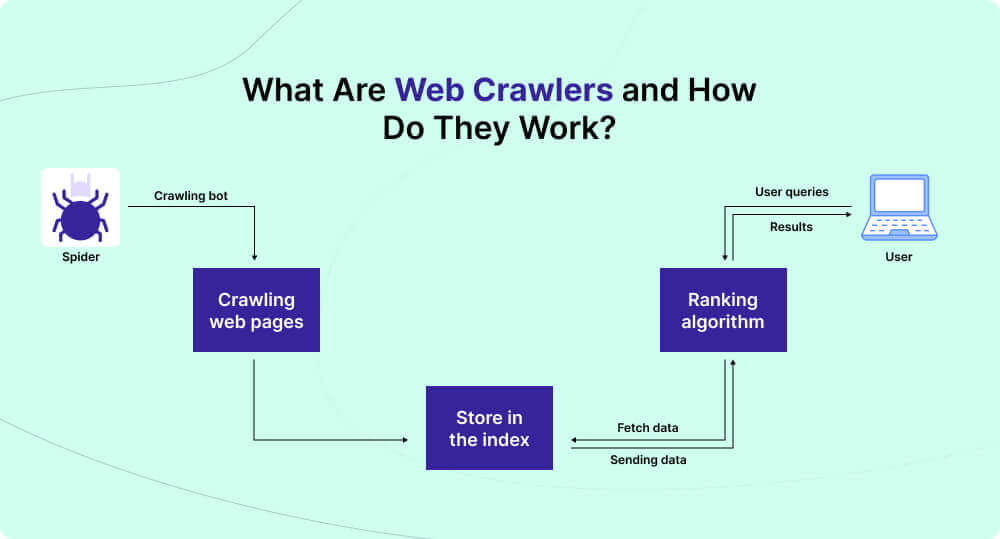

What Are Web Crawlers and How Do They Work?

Why do web crawlers matter? They help you find what you are searching for in seconds. Web crawlers, also called bots or spiders, are programs that explore the web nonstop. Together with the search engines they feed, they handle three main jobs:

Crawling: They walk through websites and look for content.

Indexing: They sort and save the content into categories, just like a librarian.

Serving: The search engine delivers this organized information when someone searches online.

Googlebot is one of the most famous crawlers. Others, like AhrefsBot, help businesses improve their SEO. Even history gets a hand: Archive.org Bot preserves old web pages for the future.

But remember, not all crawlers are friendly. Some may disrupt your site like pesky flies. Learning how they work helps you welcome the helpers and block the troublemakers.

Why Is Knowing the Most Common Web Crawlers Essential?

Your website contains your valuable content, services, and ideas. Web crawlers constantly visit it and carry what they find to the rest of the web. Some crawlers boost your visibility, but others try to steal your content. Wouldn’t you want to know who’s doing what?

Web crawlers are more than just visitors. They decide how well your site performs in search engines. When you understand them, you unlock powerful tools to make your website shine.

Boost SEO: Friendly crawlers like Googlebot index your pages efficiently, which helps your site rank higher.

Protect Performance: Too many bots can slow your site down. Managing your crawl budget helps the right bots reach your important pages without overloading your server.

Stay Secure: Not all crawlers are knights. Malicious bots can steal data. Spotting and blocking them keeps your site safe.

Sharpen Content: Understanding how crawlers prioritize pages helps you surface your most important content, so search engines show the best of your website to the world.

Now, let’s explore the 12 most common web crawlers!

The 12 Most Common Web Crawlers List

1. Googlebot

Googlebot scans and indexes websites to make them searchable on Google. Without it, your site won’t appear in search results. This web crawler is owned by Google.

There are two main versions, Googlebot Smartphone and Googlebot Desktop. The mobile version takes priority because Google uses mobile-first indexing. This means Google evaluates your site primarily as mobile users would experience it.

Googlebot follows the instructions in your robots.txt file. You can use this file to block it from crawling certain pages or resources. However, blocking a page from crawling doesn’t remove it from Google’s index. For that, you need a noindex directive, and the page must stay crawlable so Googlebot can actually see it. Add a tag like this to the page’s head section (nofollow additionally tells Googlebot not to follow the page’s links):

<meta name="googlebot" content="noindex, nofollow">
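To confirm that a live page actually carries the directive you intended, you can scan its HTML for robots meta tags. This is a minimal sketch using Python’s standard `html.parser`; the names `RobotsMetaParser` and `robots_directives` are our own, and it only inspects `<meta>` tags, not the `X-Robots-Tag` HTTP header, which can also carry noindex.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and crawler-specific meta tags."""

    def __init__(self, crawler: str) -> None:
        super().__init__()
        # Meta names that apply to this crawler: the generic "robots" plus its own name.
        self.names = {"robots", crawler.lower()}
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = {key.lower(): (value or "") for key, value in attrs}
        if attributes.get("name", "").lower() in self.names:
            # Directives are comma-separated, e.g. "noindex, nofollow".
            for directive in attributes.get("content", "").split(","):
                if directive.strip():
                    self.directives.add(directive.strip().lower())


def robots_directives(html: str, crawler: str = "googlebot") -> set[str]:
    """Return the lowercase robots directives that apply to `crawler`."""
    parser = RobotsMetaParser(crawler)
    parser.feed(html)
    parser.close()
    return parser.directives


page = '<html><head><meta name="googlebot" content="noindex, nofollow"></head></html>'
print(sorted(robots_directives(page)))  # ['nofollow', 'noindex']
```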

One important limitation is that Googlebot processes only the first 15 MB of an HTML file or other supported text-based file. Anything beyond that limit won’t be crawled or indexed, so critical content buried deep in a very large file might not get indexed.
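If you serve very large pages, you can check whether a key passage sits inside that limit by measuring its byte offset in the raw HTML. This rough sketch assumes the limit is counted in bytes of the file as served and interprets 15 MB as 15 × 1024 × 1024 bytes; treat the threshold as approximate. The helper name `marker_within_limit` is hypothetical.

```python
# Assumption: 15 MB interpreted as 15 * 1024 * 1024 bytes of the HTML as served.
LIMIT_BYTES = 15 * 1024 * 1024


def marker_within_limit(html: bytes, marker: str, limit: int = LIMIT_BYTES) -> bool:
    """Return True if the first occurrence of `marker` ends within `limit` bytes."""
    needle = marker.encode("utf-8")
    offset = html.find(needle)
    if offset == -1:
        return False  # the marker is not in the file at all
    return offset + len(needle) <= limit


small_page = b"<html><body><h1>Pricing</h1></body></html>"
print(marker_within_limit(small_page, "Pricing"))  # True
```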

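Googlebot’s user-agent can be spoofed, so you might see fake requests pretending to come from it. To confirm a request is real, run a reverse DNS lookup on the requesting IP (the hostname should end in googlebot.com or google.com), then a forward DNS lookup to check that the hostname resolves back to the same IP. Alternatively, compare the IP address with Google’s published IP ranges.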
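Comparing an IP against published ranges is a CIDR membership test, which Python’s standard `ipaddress` module handles. This is a minimal sketch that assumes you have already downloaded Google’s published list and extracted its CIDR prefixes; the prefixes below are documentation placeholders, not Google’s real ranges, and `ip_in_ranges` is a hypothetical helper name.

```python
import ipaddress

# Placeholder CIDR prefixes from the IPv4/IPv6 documentation ranges.
# Replace them with the prefixes from Google's published Googlebot IP list.
EXAMPLE_PREFIXES = ["192.0.2.0/24", "2001:db8::/32"]


def ip_in_ranges(ip: str, prefixes: list[str]) -> bool:
    """Return True if `ip` falls inside any of the given CIDR prefixes."""
    address = ipaddress.ip_address(ip)
    for prefix in prefixes:
        network = ipaddress.ip_network(prefix)
        # Only compare addresses and networks of the same IP version.
        if address.version == network.version and address in network:
            return True
    return False


print(ip_in_ranges("192.0.2.17", EXAMPLE_PREFIXES))    # True
print(ip_in_ranges("198.51.100.5", EXAMPLE_PREFIXES))  # False
```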

To ensure better crawling and indexing, keep your key content easy to access. Minimize unnecessary blocking, and make sure your mobile site performs well. When you get this right, you give your site the best chance to rank well on Google Search.

For detailed guidance on managing Googlebot, visit the official documentation.

Optimization Tips:

- Make your website mobile-friendly.

- Measure and improve loading times with tools like PageSpeed Insights.

- Submit an XML sitemap to Google Search Console.

- Avoid duplicate content by setting up canonical tags.

✅ User-Agent: Googlebot

✅ User Agent String: Check here.

2. Bingbot

Bingbot helps discover and index web pages for Bing’s search engine. If Bingbot doesn’t crawl your site, it won’t appear in Bing’s search results. It is owned by Microsoft.

Bingbot uses smart algorithms to prioritize what it crawls. It looks at signals like content relevance, freshness, and importance. These factors help Bingbot decide which pages to visit first. This helps to ensure that Bing’s search results stay up-to-date and accurate.

You can control Bingbot’s activity through your robots.txt file. But remember, blocking crawling doesn’t remove pages from Bing’s index. For that, you’ll need to use the noindex tag or remove the page altogether. Use the following:

<meta name="bingbot" content="noindex">

Bing Webmaster Tools gives you even more control. It lets you adjust Bingbot’s crawl rate. If Bingbot is consuming too many server resources, you can slow it down. Conversely, if you want faster crawling, you can increase its rate.

Efficiency is a key focus for Bingbot. It tries to avoid unnecessary crawls by prioritizing changes. For example, Bingbot won’t repeatedly crawl unchanged pages. This helps save your server resources.

To ensure Bingbot works well with your site, keep your sitemap updated and clean, provide clear navigation, and avoid techniques that block or confuse crawlers, such as relying on excessive JavaScript.

For deeper insights, visit Bing Webmaster Tools.

Optimization Tips:

- Register on Bing Webmaster Tools.

- Add structured data (schema markup) to your site.

- Keep your content fresh and links functional.

- Serve your site over HTTPS (SSL/TLS) for a secure browsing experience.

✅ User-Agent: Bingbot

✅ User Agent String: Check here

3. Yahoo Slurp

Yahoo Slurp is Yahoo’s web crawler. Its job is to explore the web and index pages for Yahoo Search. Without Slurp crawling your site, it won’t appear in search results.

Slurp works under Yahoo’s guidelines and respects the robots.txt standard. To avoid overwhelming your server, Yahoo limits how often Slurp crawls. If you need to slow it down further, Slurp has historically honored the Crawl-delay directive in robots.txt.

Yahoo Slurp works alongside Bingbot, because Yahoo’s search results are powered by Bing. Pay attention to both crawlers’ crawling and indexing practices to improve your site’s performance in Yahoo Search, and keep your content relevant and easy to access.

You control Slurp’s access through your robots.txt file, which tells Slurp which pages to crawl or skip. For example, to keep Slurp out of your /cgi-bin/ directory, add:

User-agent: Slurp

Disallow: /cgi-bin/
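Before deploying a robots.txt change, you can check how a standards-following parser reads it. This sketch uses Python’s standard `urllib.robotparser`; the helper name `allowed` and the example.com URLs are illustrative. One detail it exposes: in Python’s parser, a blank line between `User-agent` and `Disallow` ends the group, so the rule is silently dropped. Keep the two lines together in your actual file.

```python
from urllib.robotparser import RobotFileParser

# The Slurp rule, with no blank line between the two lines.
ROBOTS_TXT = """\
User-agent: Slurp
Disallow: /cgi-bin/
"""


def allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Check whether `user_agent` may fetch `url` under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


print(allowed(ROBOTS_TXT, "Slurp", "https://example.com/cgi-bin/search"))      # False
print(allowed(ROBOTS_TXT, "Slurp", "https://example.com/blog/"))               # True
print(allowed(ROBOTS_TXT, "Googlebot", "https://example.com/cgi-bin/search"))  # True
```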

Blocking Slurp in robots.txt only prevents crawling. It doesn’t remove the page from Yahoo’s index. To truly exclude pages from Yahoo’s search results, use the noindex meta tag.

For further management, verify legitimate requests from Slurp. Check the IP against Yahoo’s official list. This can help ensure your site isn’t being misused by fake bots.

To maximize your site’s visibility on Yahoo, keep your content well-structured and make sure Slurp can reach your key pages.

Optimization Tips:

- Keep content error-free and up-to-date.

- Ensure compatibility with Yahoo ad networks.

✅ User-Agent: Slurp

✅ User Agent String: Check here

4. Baidu Spider

BaiduSpider is the web crawler of Baidu, China’s largest search engine. It discovers and indexes content from websites. If your site targets a Chinese audience, optimizing for BaiduSpider is important.

This bot crawls pages to include them in Baidu’s search results. Like other search engine crawlers, it respects the robots.txt file. You can use this file to control BaiduSpider’s access to your site. For example, you can block it from crawling certain directories:

User-agent: BaiduSpider

Disallow: /private-directory/

BaiduSpider has a few unique behaviors. It focuses more on static content than on dynamic or JavaScript-heavy pages, so your content should be accessible in plain HTML to be indexed properly. It also tends to favor websites hosted in China or using a .cn domain; hosting in China improves loading times for Chinese users.

BaiduSpider does not fully follow modern crawling standards. It observes basic directives in robots.txt. However, advanced directives may not work as expected. Keeping things straightforward is important.

Baidu provides webmaster tools that let you monitor and optimize your site’s performance in its search engine. These tools show how BaiduSpider interacts with your site and help you fix potential crawling issues.

If you’re targeting Chinese users, make sure your site aligns with Baidu’s preferences: fast-loading pages, mobile-friendly designs, and locally hosted content give the best results.

Optimization Tips:

- Use Simplified Chinese for your content.

- Host your site on servers close to China.

- Avoid excessive JavaScript.

- Obtain an Internet Content Provider (ICP) license for better indexing.

✅ User-Agent: Baiduspider

✅ User Agent String: Check here

5. Yandex Bot

YandexBot is the web crawler for Yandex, Russia’s leading search engine. Its primary role is to scan and index web pages. This ensures they are available in Yandex’s search results.

Yandex offers a suite of webmaster tools. These tools provide insights into how YandexBot interacts with your site. You can monitor crawling activity and identify potential issues. You can also optimize your site’s performance in Yandex’s search results. Regularly reviewing these insights can help maintain and improve your site’s visibility.

Some bots may impersonate YandexBot using its user-agent string. To verify if a visitor is genuinely YandexBot, perform a reverse DNS lookup on the IP address. Authentic YandexBot hostnames end with yandex.ru, yandex.net, or yandex.com. After obtaining the hostname, conduct a forward DNS lookup. Ensure it matches the original IP address. This two-step verification confirms the visitor’s authenticity.
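The two-step check above translates directly into code. This is a minimal sketch, assuming Python’s standard `socket` module for DNS; the function name `verify_crawler` is hypothetical, and the lookups are passed in as parameters so you can test the logic offline. The domain list comes from the paragraph above.

```python
import ipaddress
import socket
from typing import Callable, Iterable


def reverse_dns(ip: str) -> str:
    """PTR lookup: IP address -> hostname (raises OSError if none)."""
    return socket.gethostbyaddr(ip)[0]


def forward_dns(hostname: str) -> list[str]:
    """A/AAAA lookup: hostname -> list of IP addresses (raises OSError on failure)."""
    return [info[4][0] for info in socket.getaddrinfo(hostname, None)]


def verify_crawler(
    ip: str,
    allowed_domains: Iterable[str],
    reverse_lookup: Callable[[str], str] = reverse_dns,
    forward_lookup: Callable[[str], list[str]] = forward_dns,
) -> bool:
    """Reverse-then-forward DNS verification of a crawler's IP address."""
    try:
        hostname = reverse_lookup(ip).rstrip(".").lower()
    except OSError:
        return False  # no PTR record, so the visitor cannot be verified
    # Step 1: the hostname must belong to one of the crawler's domains.
    if not any(hostname == d or hostname.endswith("." + d) for d in allowed_domains):
        return False
    try:
        addresses = forward_lookup(hostname)
    except OSError:
        return False
    # Step 2: the hostname must resolve back to the original IP.
    original = ipaddress.ip_address(ip)
    return any(ipaddress.ip_address(addr) == original for addr in addresses)


YANDEX_DOMAINS = ("yandex.ru", "yandex.net", "yandex.com")

# Offline demo with stand-in resolvers (hypothetical hostname, documentation IP).
fake_ptr = {"203.0.113.10": "spider-203-0-113-10.yandex.com"}
fake_a = {"spider-203-0-113-10.yandex.com": ["203.0.113.10"]}
print(verify_crawler("203.0.113.10", YANDEX_DOMAINS, fake_ptr.__getitem__, fake_a.__getitem__))  # True
```

In production you would call `verify_crawler(ip, YANDEX_DOMAINS)` with the default resolvers. The same helper works for other crawlers that document their hostnames, for example Googlebot (googlebot.com, google.com) or Applebot (applebot.apple.com).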

To manage YandexBot’s access to your website, you can use a robots.txt file. This file provides instructions on which parts of your site the bot can or cannot crawl. For example, to block YandexBot from accessing a specific directory, you would include:

User-agent: YandexBot

Disallow: /private-directory/

YandexBot is essential for indexing your website on Yandex. Understanding how it operates and managing its access can strengthen your site’s presence and improve your visibility in Yandex’s search results.

Optimization Tips:

- Optimize for Russian keywords and Cyrillic text.

- Use Yandex Webmaster tools to monitor your site.

- Improve loading speed.

- Add regional tags to target local audiences.

✅ User-Agent: Yandexbot

✅ User Agent String: Check here

6. DuckDuckBot

DuckDuckBot is DuckDuckGo’s official web crawler. It helps the privacy-focused search engine gather and index content from websites, building accurate search results while maintaining user privacy.

DuckDuckBot doesn’t track users, unlike other bots tied to advertising platforms. It focuses on delivering relevant results without leveraging personal data. This aligns with DuckDuckGo’s philosophy of prioritizing user privacy.

DuckDuckBot prioritizes fast-loading, mobile-friendly pages. If your site is cluttered or relies heavily on JavaScript, it may face crawling difficulties. Ensuring clean HTML can improve indexing. Optimizing your site’s speed can also help.

When DuckDuckBot visits your website, it identifies itself with its own user-agent string. That string appears in your server logs as a record of DuckDuckBot’s visits.

To manage DuckDuckBot’s interaction with your site, use the robots.txt file. This file lets you specify what parts of your site the bot can access. For example, to prevent DuckDuckBot from crawling a specific directory, include the following:

User-agent: DuckDuckBot

Disallow: /private-directory/

It’s important to note that some malicious bots may impersonate DuckDuckBot by using its user-agent string. To verify if a visitor is genuinely DuckDuckBot, you can use the DuckDuckBot API. This API allows you to check if an IP address is officially used by DuckDuckBot to crawl the web, including your website.

Optimization Tips:

- Avoid trackers and intrusive ads.

- Use simple, clean HTML layouts.

- Ensure quick page load times.

✅ User-Agent: DuckDuckBot

✅ User Agent String: Check here

7. Applebot

Applebot supports search features like Siri, Spotlight, and Safari Suggestions. If you want your content to appear across Apple’s ecosystem, understanding Applebot is essential. This web crawler is owned by Apple.

Applebot collects data from websites to provide search results and improve Apple’s AI. It identifies itself with its own user-agent string.

Applebot renders web pages to interpret their content accurately. If you block critical resources like CSS, JavaScript, or images, Applebot may not fully understand your site. Ensure these elements are accessible for effective crawling and indexing.

Applebot also gathers publicly available data for Apple’s AI models. If you prefer your content not to be used this way, disallow the Applebot-Extended user agent in your robots.txt file.

You can verify its authenticity through a reverse DNS lookup: the hostname should end in applebot.apple.com. This confirms it’s Applebot accessing your site.

Applebot respects your robots.txt directives. If specific rules for Applebot are missing, it follows instructions for Googlebot. To block Applebot from specific areas of your site, you can use:

User-agent: Applebot

Disallow: /example-path/
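The fallback rule described above (Applebot obeys Googlebot’s group when no Applebot group exists) can be simulated to preview what Applebot will be allowed to crawl. This is a hypothetical helper built on Python’s standard `urllib.robotparser`, which does not implement that fallback itself; the user-agent detection here is a simplified assumption, not Apple’s actual parser.

```python
from urllib.robotparser import RobotFileParser


def applebot_can_fetch(robots_txt: str, url: str) -> bool:
    """Hypothetical helper: apply Applebot's rules, falling back to Googlebot's group."""
    lines = robots_txt.splitlines()
    # Collect the user-agent names that have their own group (comments stripped).
    named_agents = set()
    for line in lines:
        field, _, value = line.split("#", 1)[0].partition(":")
        if field.strip().lower() == "user-agent":
            named_agents.add(value.strip().lower())
    # Use Applebot's own group if one exists; otherwise evaluate as Googlebot
    # (which in turn falls back to the "*" group if Googlebot has none).
    token = "Applebot" if "applebot" in named_agents else "Googlebot"
    parser = RobotFileParser()
    parser.parse(lines)
    return parser.can_fetch(token, url)


GOOGLE_ONLY = "User-agent: Googlebot\nDisallow: /drafts/\n"
print(applebot_can_fetch(GOOGLE_ONLY, "https://example.com/drafts/post"))  # False
```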

Applebot plays a key role in making your site discoverable on Apple platforms. Ensure compatibility with Applebot to tap into a massive audience.

Optimization Tips:

- Include conversational keywords for voice search.

- Add structured data for better discoverability.

- Optimize for local searches with accurate location info.

- Keep content fresh and engaging.

✅ User-Agent: Applebot

✅ User Agent String: Check here

8. AhrefsBot

AhrefsBot is the web crawler used by Ahrefs, a popular SEO toolset. Its main job is to gather data for Ahrefs’ backlink index, SEO analysis, and keyword research tools. Understanding how it works can help you manage its crawling activity on your website effectively.

AhrefsBot crawls the web to collect data on backlinks, website rankings, and overall site performance. It uses a specific user-agent string to identify itself.

If you see unusual activity in your server logs, you can check whether it’s AhrefsBot by performing a reverse DNS lookup. Authentic requests resolve to *.ahrefs.com.

AhrefsBot strictly follows robots.txt rules, so you can keep it away from specific pages or directories. To control its access to your site, add directives like:

User-agent: AhrefsBot

Disallow: /private/

AhrefsBot is designed to crawl responsibly. It adjusts its crawl rate based on your server’s performance. If you encounter issues, you can contact Ahrefs support to fine-tune the bot’s crawling behavior.

AhrefsBot powers one of the largest backlink databases available to marketers. If you want accurate insights from Ahrefs’ tools, allowing AhrefsBot to crawl your site can provide valuable data.

This web crawler is a vital part of Ahrefs’ SEO toolset. The data it gathers helps site owners analyze backlinks, refine their strategies, and improve rankings. By managing its access properly, you can make the most of its capabilities while protecting your server resources.

Optimization Tips:

- Regularly audit and clean up backlinks.

- Use internal links to improve site navigation.

- Leverage Ahrefs data to identify improvement areas.

✅ User-Agent: Ahrefsbot

✅ User Agent String: Check here

9. SemrushBot

SemrushBot is the web crawler behind Semrush, one of the leading SEO and marketing tools. It collects data that powers Semrush features such as keyword tracking, backlink analysis, and competitive research. Knowing how SemrushBot works can help you manage its interaction with your site.

SemrushBot crawls websites to gather information about content, links, and other SEO metrics. It uses a distinct user-agent string, which lets webmasters identify its activity in server logs.

SemrushBot respects the robots.txt file. This ensures the bot avoids restricted pages or directories. You can use this file to control its crawling behavior. For example, to block SemrushBot from specific parts of your site, add:

User-agent: SemrushBot

Disallow: /example-path/

The crawler is designed to crawl efficiently without overloading your server. If you suspect unauthorized activity, perform a reverse DNS lookup; authentic SemrushBot requests resolve to *.semrush.com.

SemrushBot collects critical data that fuels insights for marketers and site owners. By allowing it to crawl your site, you can ensure accurate reporting in Semrush tools. These insights help you monitor backlinks, optimize keywords, and refine your SEO strategies.

SemrushBot is a vital component of Semrush’s platform. It gathers data that enables better decision-making for SEO and digital marketing. Managing its access strikes a balance: you get its benefits while protecting your server’s resources.

Optimization Tips:

- Use Semrush to identify high-performing keywords.

- Optimize your site for usability and speed.

- Analyze competitors to improve your strategy.

- Focus on high-quality, relevant content.

✅ User-Agent: SemrushBot

✅ User Agent String: Check here

10. Facebook Crawler

Facebook Crawler has been renamed Meta Web Crawler. Meta’s web crawler is used by Facebook and Instagram to gather metadata from websites. It helps ensure proper previews when content is shared on these platforms. If you’ve seen link previews with titles, images, and descriptions, Meta’s crawler is behind that process.

Meta’s web crawler fetches Open Graph metadata from websites. This metadata is used to generate link previews. It also checks for updates to ensure previews stay accurate and relevant.

You can control how the crawler interacts with your site using the robots.txt file. To block it from specific pages, you can add:

User-agent: facebookexternalhit

Disallow: /example-path/

However, keep in mind that restricting the crawler may prevent proper link previews on Facebook and Instagram. To make the most of Meta’s crawler, include Open Graph tags in your website. These tags define how your content appears when shared.

Key tags include:

- og:title

- og:description

- og:image

Without these, previews may not display correctly.
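A quick way to audit a page for these three tags is to parse its HTML and report which ones are missing or empty. This is a minimal sketch with Python’s standard `html.parser`; `OpenGraphParser` and `missing_og_tags` are hypothetical names, and the check only covers the three properties listed above.

```python
from html.parser import HTMLParser

REQUIRED_OG = ("og:title", "og:description", "og:image")


class OpenGraphParser(HTMLParser):
    """Collects <meta property="og:..." content="..."> pairs from a page."""

    def __init__(self) -> None:
        super().__init__()
        self.tags: dict[str, str] = {}

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = {key: (value or "") for key, value in attrs}
        prop = attributes.get("property", "")
        if prop.startswith("og:"):
            # Keep the first occurrence of each property.
            self.tags.setdefault(prop, attributes.get("content", ""))


def missing_og_tags(html: str) -> list[str]:
    """Return the required Open Graph properties that are absent or empty."""
    parser = OpenGraphParser()
    parser.feed(html)
    parser.close()
    return [name for name in REQUIRED_OG if not parser.tags.get(name)]


page = """<head>
<meta property="og:title" content="12 Most Common Web Crawlers">
<meta property="og:image" content="https://example.com/cover.png">
</head>"""
print(missing_og_tags(page))  # ['og:description']
```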

Meta’s crawler ensures your content looks appealing on social media. A well-designed preview can boost clicks and engagement. By optimizing your site for the crawler, you enhance visibility and user experience on Facebook and Instagram.

Meta’s web crawler plays a crucial role in social sharing. Managing it properly ensures your links stand out and drive traffic effectively.

Optimization Tips:

- Add Open Graph tags to your pages.

- Use high-quality images for previews.

- Test links with Facebook’s Sharing Debugger.

- Don’t block this bot in your robots.txt file.

✅ User-Agent: facebookexternalhit/1.1 or meta-externalagent/1.1

✅ User Agent String: Check here

11. Twitterbot

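Twitterbot is the crawler that X (formerly Twitter) uses to fetch pages shared on the platform and build their link previews, known as Twitter Cards. The term “Twitter bots” also covers automated accounts that interact with Twitter’s API to run tasks such as posting updates, engaging with users, or analyzing data. Those accounts are powered by Twitter’s API v2, which provides structured access to the platform’s data.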

Twitterbots automate repetitive tasks on Twitter. They can post tweets, retweet, like posts, and reply to users. They are also useful for monitoring keywords, hashtags, or mentions. Some bots even perform advanced tasks, like running polls or sending direct messages.

To create a Twitterbot, developers need to use Twitter’s API v2. This involves registering for a developer account and creating a project in the Twitter Developer Portal. The API provides endpoints to retrieve and post data on behalf of a Twitter account.

Bots require authentication via API keys and tokens. These credentials ensure secure access to the account and allow the bot to perform its tasks. Developers often write the bot’s logic using programming languages like Python or JavaScript.

You can control how the Twitterbot crawler interacts with your site using the robots.txt file. Keep in mind that blocking it prevents card previews for your links. To block it from your entire site, add:

User-agent: Twitterbot

Disallow: /

Twitter has strict rules for bots. Automated activity must comply with Twitter’s Developer Agreement and Automation Rules. Bots should avoid spammy behavior or violating users’ privacy. For transparency, Twitter encourages labeling accounts as automated.

Twitter bots save time by automating repetitive tasks. Businesses use them to manage social media efficiently, monitor trends, and provide customer support. They are also popular for creative uses, like generating art, poetry, or jokes.

Optimization Tips:

- Use Twitter Card metadata.

- Optimize images for Twitter’s recommended dimensions.

- Keep titles short and engaging.

- Check previews with Twitter’s Card Validator.

✅ User-Agent: twitterbot

✅ User Agent String: Check here

12. Archive.org Bot

Archive.org Bot is the automated tool used by the Internet Archive to preserve web content. It plays a key role in maintaining the Wayback Machine, one of the largest digital libraries of websites.

The bot crawls websites to capture and archive web pages. It ensures that content is stored for future reference, even if the original site changes or goes offline. This makes it an essential tool for preserving internet history.

Archive.org Bot visits web pages like a regular user. It downloads the HTML, images, and other resources to create a snapshot. These snapshots are stored in the Wayback Machine. The bot follows the rules set in a site’s robots.txt file, respecting webmasters’ preferences.

The bot helps preserve valuable digital content. Researchers, journalists, and historians rely on the archived data to track changes or recover lost information. It also aids in verifying content for legal or academic purposes.

You can control its access using your robots.txt file. To block it, add this line:

User-agent: ia_archiver

Disallow: /

However, blocking the bot means your site won’t be archived, potentially losing historical value. The archived snapshots are used by academics, fact-checkers, and everyday users. Businesses also use the Wayback Machine to monitor competitors’ past website changes.

Archive.org Bot is a vital tool for preserving the internet’s history. By archiving web pages, it ensures that information remains accessible for generations to come. Whether to allow or block it depends on your site’s goals.

Optimization Tips:

- Keep key pages accessible.

- Avoid excessive redirects.

- Use descriptive URLs for better archiving.

- Don’t block this bot in robots.txt.

✅ User-Agent: archive.org_bot

✅ User Agent String: Check here

Specialized and Niche Web Crawlers

Specialized web crawlers perform targeted tasks on websites. Pricing bots scan e-commerce platforms to compare product prices. Media crawlers, like Pinterestbot, collect images to enhance visual platforms. News aggregators gather headlines to provide real-time updates.

Unlike general web crawlers, these bots are designed for specific data extraction. They go beyond indexing for search engines by accessing focused content areas.

Control access to your site using the robots.txt file. This file allows you to permit or block specific bots. To attract media crawlers, ensure images are optimized with proper metadata. For privacy, restrict bots from accessing sensitive data.

Properly managing these crawlers ensures they work in your favor. It prevents overloading your site. It also protects your data.

How to Optimize Your Website for Web Crawlers?

Web crawlers play a key role in indexing and ranking your site. To maximize their effectiveness, your website must be well-organized and easy to navigate.

Boost Crawlability: Use clear navigation menus and an updated XML sitemap. This helps crawlers find your content efficiently.

Focus on Indexing: Add metadata, including page titles and descriptions, to each page. Avoid duplicate content to ensure crawlers focus on unique information.

Manage Crawl Budget: Block low-value pages, such as admin links or duplicate pages, from being crawled. Use internal linking to connect important content, helping crawlers prioritize key pages.

Improve Website Speed: Fast-loading websites rank higher and provide a better user experience. You can use Next3 Offload to optimize and offload your media files to improve website speed.

Use a Robots.txt File: Control how crawlers interact with your site by specifying allowed and disallowed pages.

Implement Sitemap.xml: Help crawlers navigate your website by providing a detailed sitemap.

Focus on Mobile-Friendliness: Google uses mobile-first indexing, so Googlebot crawls your site primarily as a smartphone user would see it.

Ensure Proper Meta Tags: Include accurate meta titles and descriptions to help crawlers understand your content.

Regularly Update Content: Fresh content encourages crawlers to revisit your site more frequently.
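If your platform doesn’t generate a sitemap for you, a basic one is easy to build. This is a minimal sketch using Python’s standard `xml.etree.ElementTree` and the sitemaps.org 0.9 namespace; `build_sitemap` is a hypothetical helper, and it only emits the `loc` and `lastmod` fields.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, str]]) -> str:
    """Build sitemap XML from (absolute URL, last-modified YYYY-MM-DD) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    # ElementTree escapes characters such as & in URLs automatically.
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


print(build_sitemap([("https://example.com/", "2024-05-01")]))
```

Save the output as sitemap.xml at your site root and submit it in Google Search Console or Bing Webmaster Tools.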

Optimizing your website for crawlers improves search engine indexing and user experience. Consistent efforts lead to better rankings and increased traffic.

How to Identify Web Crawlers in Your Logs?

Identifying web crawlers in your website log helps you track bot activity. It also helps you understand how crawlers interact with your site. Here’s how to do it:

Use the Right Tools: Start by accessing your server logs. These logs record every visitor to your site. Tools like WhatIsMyUserAgent can help you identify and decode user-agent strings.

Check User-Agent Strings: Look for user-agent strings that belong to crawlers. For example, “Googlebot” or “Bingbot” indicate the presence of search engine crawlers. These crawlers typically identify themselves.

Filter Bot Activity: Filter log entries by user-agent, and verify the IPs of the crawlers that matter to you, to separate bot traffic from real visitors. This keeps your logs focused on relevant data and improves your analysis.
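The steps above can be sketched as a small log scanner. It assumes your server writes the common "combined" log format used by default in many Apache and Nginx setups (adjust the regex if yours differs), and the crawler token list is an illustrative subset of this guide. Because user-agents can be spoofed, these counts reflect claimed identity only.

```python
import re
from collections import Counter

# Combined log format: IP - - [time] "request" status size "referer" "user-agent"
LOG_PATTERN = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[[^\]]+\] "[^"]*" \d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

# Illustrative subset of crawler tokens from this guide; extend as needed.
CRAWLER_TOKENS = ("Googlebot", "bingbot", "Slurp", "Baiduspider", "YandexBot",
                  "DuckDuckBot", "Applebot", "AhrefsBot", "SemrushBot",
                  "facebookexternalhit", "Twitterbot")


def count_crawler_hits(lines):
    """Count requests per crawler token by matching the user-agent field."""
    hits = Counter()
    for line in lines:
        match = LOG_PATTERN.match(line)
        if not match:
            continue  # skip lines that don't fit the expected format
        agent = match.group("agent").lower()
        for token in CRAWLER_TOKENS:
            if token.lower() in agent:
                hits[token] += 1
                break
    return hits


sample = [
    '203.0.113.5 - - [10/Oct/2024:13:55:36 +0000] "GET / HTTP/1.1" 200 512 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '198.51.100.7 - - [10/Oct/2024:13:56:01 +0000] "GET /blog HTTP/1.1" 200 1024 "-" '
    '"Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]
print(count_crawler_hits(sample))  # Counter({'Googlebot': 1})
```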

By following these steps, you can effectively identify web crawlers in your logs and better understand their behavior on your site. This helps you optimize site performance and manage bot traffic.

How to Protect Your Website from Malicious Crawlers?

Malicious crawlers can damage your website by stealing data or overloading it with requests. Here’s how to protect your site:

Monitor for Warning Signs: Look for spikes in traffic or unusual patterns in your logs. These could indicate malicious crawler activity. Early detection is key to preventing damage.

Use Defensive Measures: Rate-limiting slows down bots by limiting the number of requests from a specific IP. CAPTCHAs ensure that only humans can access certain parts of your site. Security tools like Cloudflare provide an extra layer of protection against harmful crawlers.

Protect Your Data: By implementing these defenses, you can block malicious crawlers and keep your website secure. This prevents data theft and ensures a smooth experience for your legitimate visitors.
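Rate-limiting, one of the defensive measures above, can be as simple as a per-IP sliding window. This is a minimal in-memory sketch; the class name and limits are hypothetical, and in production you would usually rely on your web server, CDN, or a service like Cloudflare rather than application code. The clock is injectable so the behavior can be tested without waiting.

```python
import time
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most `max_requests` per IP within a rolling `window_seconds`."""

    def __init__(self, max_requests: int, window_seconds: float, clock=time.monotonic):
        self.max_requests = max_requests
        self.window = window_seconds
        self.clock = clock
        self.history = defaultdict(deque)  # ip -> timestamps of recently allowed requests

    def allow(self, ip: str) -> bool:
        now = self.clock()
        timestamps = self.history[ip]
        # Drop timestamps that have slid out of the window.
        while timestamps and now - timestamps[0] >= self.window:
            timestamps.popleft()
        if len(timestamps) >= self.max_requests:
            return False  # over the limit: e.g. respond with HTTP 429
        timestamps.append(now)
        return True


fake_now = [0.0]
limiter = SlidingWindowLimiter(max_requests=3, window_seconds=10, clock=lambda: fake_now[0])
print([limiter.allow("203.0.113.9") for _ in range(4)])  # [True, True, True, False]
fake_now[0] = 10.0
print(limiter.allow("203.0.113.9"))  # True
```

This sketch keeps a history for every IP it sees, so a long-running service would also need to evict idle entries.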

Protecting your site from malicious crawlers is crucial. Implement these steps to safeguard your website and your data.

Tools to Monitor and Manage Web Crawlers

Web crawlers constantly scan your website. Some are beneficial, while others may cause issues. Here’s how to manage them:

Google Search Console: Its Crawl Stats report shows how Googlebot crawls your site, and its indexing reports help ensure Google can crawl and index your pages without problems.

Screaming Frog: This tool shows how crawlers see your site. It helps you identify issues that may affect crawlability.

Sucuri: It protects your site from malicious bots and unwanted visitors. This adds an extra layer of security.

Using these tools will help you monitor, manage, and protect your site. They ensure smooth crawling and keep malicious bots away. With the right tools, you can maintain control over your site and improve user experience.

Wrapping Up

So, that was our list of the 12 most common web crawlers. If you liked it, let us know in the comments.

Web crawlers play a crucial role in the online ecosystem. They gather and index content, helping your site appear in search results. However, if not managed properly, they can slow down your site or cause issues.

Start by analyzing your server logs. Identifying crawling problems early helps you optimize your site’s performance. This prevents unnecessary load and ensures smooth operation.

To keep your site running efficiently, begin with the basics. Proper management of web crawlers leads to better site performance and user experience. Start today, and your website will benefit in the long run.

You May Read Also

- Top 27 must have WordPress plugins for every website

- How To Optimize WordPress Website Speed

- How to make a WordPress website in 2024 (Step by Step)

- How to Build a WooCommerce WordPress Website

- Semantic SEO: Everything You Need to Know

FAQs on Web Crawlers

1. How do web crawlers impact SEO?

Web crawlers play a huge role in SEO by indexing your website’s content. The better they crawl and index your pages, the higher the chance your site will appear in search engine results. It improves your site’s visibility and organic traffic.

2. Are all web crawlers safe?

Not all crawlers are safe. Some malicious bots can harm your site by scraping content, overloading your server, or causing security risks. It’s important to block harmful crawlers and monitor your site’s traffic.

3. What is the difference between a bot and a web crawler?

A web crawler is a type of bot that automatically browses the web and collects content, most often so search engines can index it. Bots in general can serve many different purposes, while crawlers specifically gather web content, usually to help search engines index and rank pages.

4. How can I prevent unwanted web crawlers from accessing my site?

You can block unwanted web crawlers with a robots.txt file, although only well-behaved bots obey it. CAPTCHAs and bot protection services like Cloudflare add enforcement and help you manage which bots can access your content.

5. What are the benefits of web crawlers for my website?

Web crawlers help your website get discovered by search engines. They make it possible for your site to rank and make it easier for people to find your content online. Without them, your site would remain invisible to search engines.

6. How can I optimize my site for web crawlers?

Ensure your site has clear navigation, proper metadata, and no broken links. Use XML sitemaps and avoid duplicate content. Make sure your pages load quickly to help crawlers index your site more efficiently.